What does one do when an AI decides what kind of person you are, and what you are and are not allowed to do?

Before 14 April 2022, X (Twitter) was on its way to becoming a government propaganda tool. It appeared to many that certain messages were being “approved” by the White House and others censored. As much as the management at the time denied it, the lived experience of users was very different. Left-wing agitators were given a free pass while right-wing ones were banned on a whim.

And in other news – Mark Zuckerberg came clean and admitted that Facebook had been pressured to censor content.

Many applauded when Elon Musk stepped in to save free speech, while some sought to shut him down.

Elon Musk embraced AI, reduced staff and life went on as normal, or did it?

On Twitter people moved on to fighting about bears while we have slowly been incorporating AI into our lives. Companies see profit opportunities through better stock and people management. Governments see control opportunities through social credit scores and 15-minute cities.

My experience has shown that it may not go as intended when left to AI, which is currently more artificial than intelligent. The innocent can very well be caught up with the guilty and, with no one to appeal to, where does that leave the little man without a voice?

X (Twitter) is arguably the world’s largest free speech platform, the town hall as it were. It is where people can share ideas and challenge them. As far as free speech goes it’s about as good as it gets. Anyone can share a thought or idea, even if no one is listening.

About me

Before we delve into whether I deserve my punishment, there are a few things you should know about what my ethos was on X.

Some have called me a troll. The fact is, if you were able to look through my history, you would see that I challenge people’s sometimes quite silly, and other times outright stupid, notions of the world. I have a few pet hates and a couple of go-to statements that are intended to stimulate conversation.

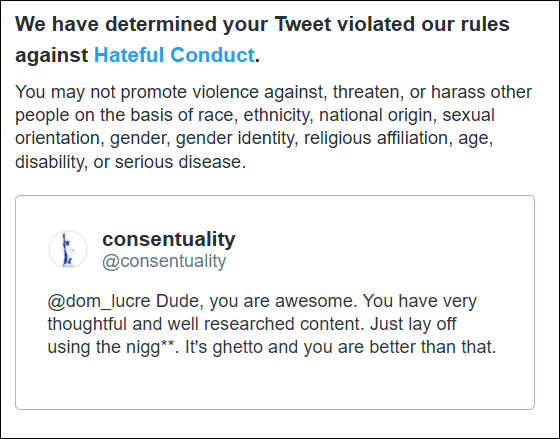

Racist language

My number one hate is the word nigger, people who use it tend to be trolls or are trying to illicit some racial hatred. In all my dealings on X the people I have found who use it the most are more often than not black folk calling out other black folk. Where I use it I mirror the energy of the original poster, so yes I use “the word” in full when talking about “the word”. It’s silly that we cannot discuss “the word” as if it’s some boogeyman.

If a white guy happened to call a black guy nigga we can expect there to be a fight, even though nigga is considered a term of endearment and is not the same as a nigger which is a racial slur. Yet white people are frequently labelled racist for using nigga when black folk are not. My argument in these cases is that this disparity in treatment between white and black people for using the same language is racism.

Language changes

Language changes, and words take on new meanings or have an additional meaning attributed to them. It is usually easy to determine which meaning is intended from the context the words are used in. My favourite words for pointing this out are faggot and gay. Both are good English words, one adopted by the gay community and one used as a derogatory term, and yet both have well-established English meanings not related to sex.

How I got banned from X

One could argue that I was stupid and held the wrong person to account in the wrong way; this is probably true. However, I believe that there was a build-up to this: a series of false flags the AI recorded against my account, a social score if you will, that determines whether one is allowed to participate in the town hall or not.

Let’s dig into what Elon Musk’s AI will use to determine if you are violent or hateful.

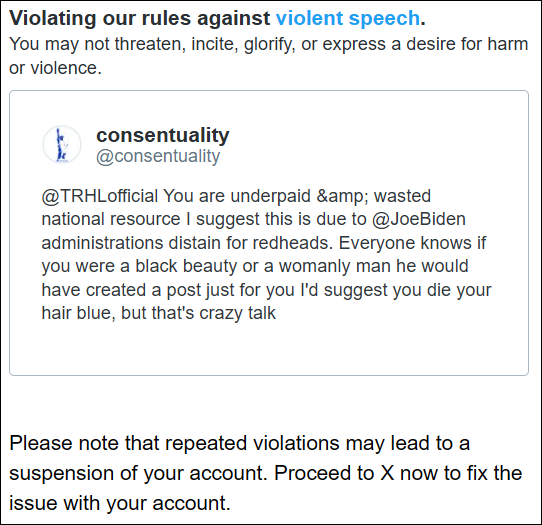

Die you bastard

Now I would advise you not to tell anyone to go and die. Firstly, that’s not nice and secondly, it’s not nice. It’s not something one says to another in a civil conversation and it will not progress any discussion positively. Now if one were dyslexic, tired or just having a blond moment, then one might type something like “I’d suggest you die your hair blue”. That’s it, that’s violent speech and nothing anyone says can prove otherwise.
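I have no way of knowing how X's system actually works, so what follows is only a guess at the kind of logic that would produce this result. It is a minimal Python sketch that assumes a bare word-list check with no understanding of context. The names `FLAGGED_WORDS` and `naive_flag` are made up for illustration and are not taken from X.

```python
import re

# Hypothetical word list: an assumption for illustration, not X's real list.
FLAGGED_WORDS = {"die"}


def naive_flag(text: str) -> bool:
    """Flag a message if any single word appears in the word list.

    The check looks at words in isolation, so it cannot tell a threat
    from a misspelling of "dye".
    """
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in FLAGGED_WORDS for word in words)


# The misspelled hair advice is flagged; the correctly spelled version is not.
print(naive_flag("I'd suggest you die your hair blue"))  # True
print(naive_flag("I'd suggest you dye your hair blue"))  # False
```

A check like this treats a spelling slip exactly the same as a threat, which is the sort of false flag I am describing.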

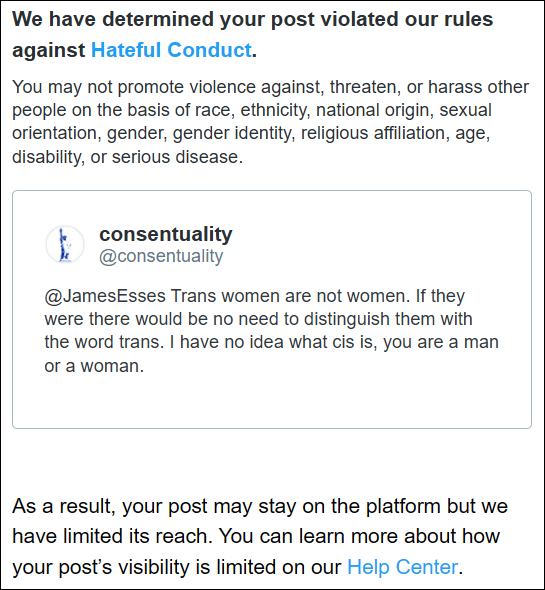

What is a woman?

This is arguably the biggest question of our generation; there have been congressional hearings to discuss the issue. Even when the average person can provide historical evidence that, in all spheres of life, men and women are not the same, there are those who will still claim that to be a woman all one has to do is say so.

If a man stands up and says “I am trans, I identify as a woman”, in the minds of some he can now magically compete against women, bear children and qualify for a cervical exam.

Querying the distinction between a trans woman and a woman is enough to get you labelled as hateful and, yes, it is another way to get a strike against your social score and move one step closer to Elon Musk’s AI ban.

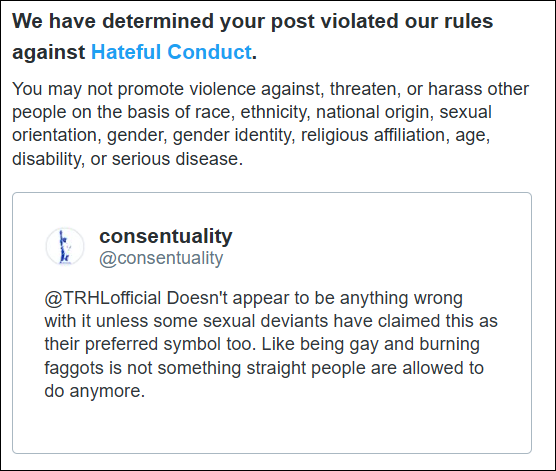

Burn the faggots

Now this one is a bit awkward, as this word has been used by popular author Bernard Cornwell in his book 1356. It’s a great read if you are into historical fiction.

“They were going to burn her as a heretic,” Thomas said. “they’d already built the fire. they had piles of straw for kindling and they’d stacked the faggots upright because they burn more slowly. That way the pain lasts much longer” page 250-something or other

It appears that the AI is not well-read, in addition to not being able to understand the nuances of the English language.

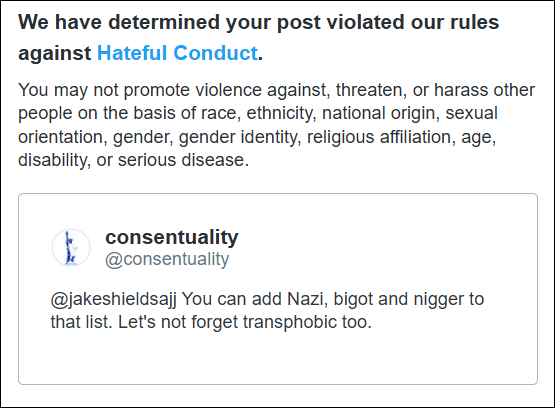

This piece of hateful conduct contains a subtle bit of logic. Did you spot the logic trap that an Artificial Intelligence is incapable of spotting? Then judge for yourself if the intention was to do anyone harm.

Call me what you want

This appears to be a self-own. In an age where people demand you use this or that term, mother is triggering and a period is something men have, it feels strange to me that an AI would target someone for hateful conduct against themselves. I believe the discussion was about calling people slurs during debates. It was a while back and X conveniently deleted all the evidence; however, I think it’s pretty clear I was not targeting anyone specific, but rather adding terms to a list of some sort.

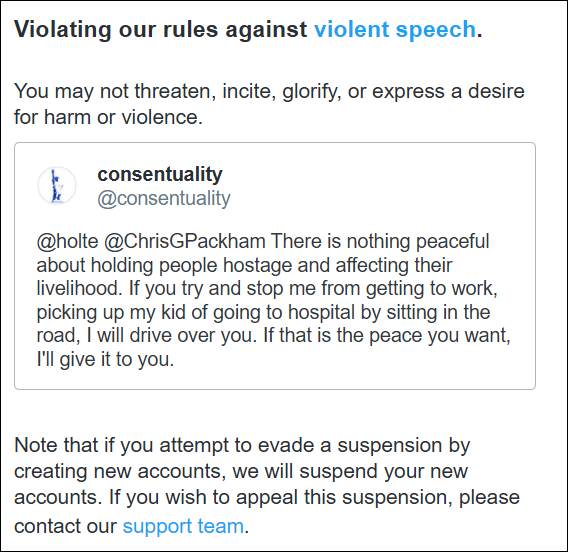

The one that broke the camel’s back

This is the big one, the tweet of all tweets, it encapsulates what I stand for, tests AI logic and asks difficult questions.

First some background.

Some consider Just Stop Oil a terrorist organisation; they certainly cause havoc and mayhem, and many people certainly fear coming across them.

For example:

- 2m Damage caused by digging up roads

- Just Stop Oil actions lead to death

- Economic damage from 1 disruption event

  - £750,000

  - 709,000 drivers disrupted

  - £1m in policing costs

- Put the public at risk by consuming police time

  - £4.5m in 6 weeks or 13,770 officer shifts

  - 1st Oct-14th Nov Met policing costs = £6.5m

- Prevented a woman from taking her baby to the hospital

The £750,000 figure in point 3 above is a gross underestimate. Even if Just Stop Oil caused only one hour of disruption, that would cost a minimum of £7m: 709,000 people at £10 per hour.
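The sum behind that £7m figure can be checked with a short Python sketch. The function name `disruption_cost` is made up for illustration, and the £10 per hour rate and the one-hour delay are my own assumed values rather than measured ones.

```python
def disruption_cost(people: int, hourly_rate_gbp: float, hours: float) -> float:
    """Estimate lost time in pounds: people x hourly rate x hours lost."""
    return people * hourly_rate_gbp * hours


# 709,000 disrupted drivers, an assumed £10 per hour, one hour of delay each.
cost = disruption_cost(people=709_000, hourly_rate_gbp=10.0, hours=1.0)
print(f"£{cost:,.0f}")  # £7,090,000
```

Change the hourly rate or the length of the delay and the total scales in direct proportion.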

The premise that Just Stop Oil is a friendly organisation trying to do its bit for humanity is a lie. Their actions by their very nature are forceful and violent. They lead to huge amounts of damage to the environment, people’s livelihoods, medical needs and childcare requirements.

Then there is the hypocrisy: these people use just as much energy as the average person, if not more. They want to disrupt your holiday but are perfectly happy taking their own.

And let’s not forget your rights. You have the right to free movement, and anyone who tries to unlawfully prevent that is committing the crime of hostage-taking, which is by its nature a violent act.

Hostage-taking: A person, whatever his nationality, who, in the United Kingdom or elsewhere (a) detains any other person (“the hostage”), and (b) in order to compel a State, international governmental organisation or person to do or abstain from doing any act.

Which brings us to the tweet. You will notice the little but powerful word “if”. This is not a threat to do anyone harm, but rather a warning as to how I intend to protect myself should you wish to do me harm. This is permissible speech according to X’s own policy.

“This approach allows many forms of speech to exist on our platform and, in particular, promotes counterspeech: speech that presents facts to correct misstatements or misperceptions, points out hypocrisy or contradictions, warns of offline or online consequences …”

Appealing

All efforts to get clarification of, and reasoning for, these decisions have fallen on deaf ears. Either no one at X has the authority to review the processes of the AI, no one has the logic to see how flawed the system is or, worse, no one cares because I am one small voice and the complainer is a paying customer. If you are a company, no matter what your ethos is, you must support the paying customer. This appears to be the flaw in Elon Musk’s free speech platform: those who pay, get a say, while those who don’t, don’t. This is not so much a free-speech platform as a pay-for-speech platform.

After appealing to the AI, and then to customer support, who told me to appeal to the AI, I took a last-ditch stab at salvaging my dignity with a letter to the CEO, which went unanswered.

I have now accepted my fate and decided to leave this last message to humanity as a warning of what’s to come.

Consequences to me

So what are the consequences of this ban?

Well, I got some time back as I no longer felt the need to use X. This may or may not diminish your experience depending on whether you were one of my 204 loyal cult followers. Cult being one of the many pejoratives thrown at me.

More worrying, though, is that my name has been rubbished. Mr Musk and his gang of free speech advocates have labelled me a violent, hateful person and as such not worthy of a voice. Those who know me know this not to be true (take my word for it, lol), and then there are the other 8 billion odd people, many of whom take their lead from what others tell them.

On the plus side, sites like https://botsentinel.com/ no longer rate me as problematic. So that’s a win.

Consequences for society

The sad truth is that as more AI creeps into our lives and decides what we can and cannot do, mistakes like this will have bigger and longer-reaching consequences.

In future, we can expect AI to govern more of our lives, and we may find that the number of goods available to us, our travel opportunities and even our job prospects are determined by a line of faulty code owned by a company that does not care.

Imagine being denied a job because you told someone to “die their hair” or being unable to access banking for questioning the difference between a woman and a trans-woman.

We embrace this technology at our peril, so learn from my mistake, save yourselves and learn to keep your mouth shut. Free speech comes at a cost.

X has been approached for comment.